Learning with SGD and Random Features

Abstract

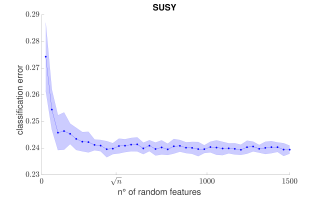

Sketching and stochastic gradient methods are arguably the most common techniques for deriving efficient large-scale learning algorithms. In this paper, we investigate their application in the context of nonparametric statistical learning. More precisely, we study the estimator defined by stochastic gradient with mini-batches and random features. The latter can be seen as a form of nonlinear sketching and can be used to define approximate kernel methods. The estimator considered is not explicitly penalized or constrained; regularization is implicit. Indeed, our study highlights how different parameters, such as the number of features, the number of iterations, the step size, and the mini-batch size, control the learning properties of the solutions. We do this by deriving optimal finite-sample bounds under standard assumptions. The results are corroborated and illustrated by numerical experiments.
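To make the kind of estimator described above concrete, here is a minimal sketch in Python. It is not the authors' implementation. It assumes squared loss, random Fourier features for a Gaussian kernel, and a constant step size, none of which the abstract specifies. The function names and parameter defaults (`num_features`, `num_passes`, `step_size`, `batch_size`, `sigma`) are hypothetical and chosen only for illustration.

```python
import numpy as np


def random_fourier_features(X, W, b):
    """Map inputs to M random cosine features (assumed Gaussian-kernel approximation)."""
    M = W.shape[1]
    return np.sqrt(2.0 / M) * np.cos(X @ W + b)


def sgd_random_features(X, y, num_features=200, num_passes=20,
                        step_size=0.5, batch_size=16, sigma=0.5, seed=0):
    """Mini-batch SGD on squared loss over random features, with no explicit penalty.

    Any regularization comes only from the number of features, the number of
    iterations, the step size, and the mini-batch size.
    """
    rng = np.random.default_rng(seed)
    n, d = X.shape

    # Random frequencies and phases for a Gaussian kernel of width sigma (assumption).
    W = rng.normal(scale=1.0 / sigma, size=(d, num_features))
    b = rng.uniform(0.0, 2.0 * np.pi, size=num_features)
    Z = random_fourier_features(X, W, b)

    w = np.zeros(num_features)
    for _ in range(num_passes):
        perm = rng.permutation(n)
        for start in range(0, n, batch_size):
            idx = perm[start:start + batch_size]
            residual = Z[idx] @ w - y[idx]
            grad = Z[idx].T @ residual / len(idx)  # mini-batch gradient of 0.5 * mean squared error
            w -= step_size * grad

    def predict(X_new):
        return random_fourier_features(X_new, W, b) @ w

    return w, predict
```

Calling `sgd_random_features(X, y)` on an `(n, d)` input array and a length-`n` target vector returns the weight vector and a `predict` function. Varying `num_features`, `num_passes`, `step_size`, or `batch_size` while leaving the loss unpenalized is one way to explore the implicit regularization the abstract refers to.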

Domains

Machine Learning [cs.LG]

Main file

imgs/RFroofSUSY.pdf (26.21 KB)

05_experiments.tex (4.77 KB)

SGM_with_Random_Features.pdf (697.33 KB)

imgs/MBssHIGGS.pdf (57 KB)

imgs/MBssSUSY.pdf (39.2 KB)

imgs/RFroofHIGGS.pdf (30.5 KB)

Origin: Files produced by the author(s)